week4

Links

There are a large number of web sites that discuss aspects of acoustic space modelling and room simulation (a web search for "room acoustic simulation" will yield a range of interesting sites). Here are several just for the heck of it:

- Waveguide Mesh Modelling -- a fairly new site with some links to other mesh-oriented modelling efforts and discussions [note: this is like the finite-element simulation project we described in class.]

- IRCAM Room Acoustics Team -- IRCAM has been involved in advanced room acoustic modelling research for several years

- a good set of links to various other sites, including links for Head-Related Transfer Function measurements

Francisco discussed convolution as a methodology for recreating the acoustics of specific spaces. Several people were interested in sites that allowed the downloading of impulse response soundfiles/data:

We realized our convolution examples in class using Tom Erbe's marvelous program SoundHack.

Code

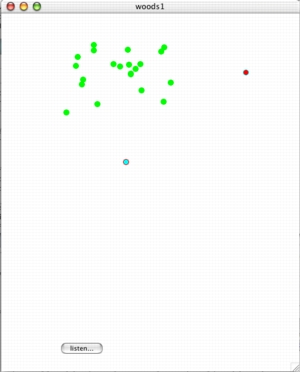

- woods.pat.sit -- Max/MSP patch of the woods-reflection simulation with fixed source/listener and editable tree locations. Source sound is from real-time input (mono output)

- woods1.tar.gz -- the RTcmix/wxWindows woods-reflection simulation, with source sound from a soundfile. Editable source and listener location (stereo output)

- spatical1.tar.gz -- goofy real-time spatializer, RTcmix/wxWindows positioning of soundfile playback.

In addition to these, we also explored several extant room-simulation programs:

- PLACE -- Doug Scott's ray-tracing/spatial reverb matrix instrument for stationary sources

- MOVE -- Doug Scott's ray-tracing/spatial reverb matrix instrument for moving sources

- SROOM -- F. R. Moore's original "inner room" spatial simulator for stationary sources

- MROOM -- F. R. Moore's original "inner room" spatial simulator for moving sources

- FREEVERB -- a very high-quality, public domain reverberator

- REV -- three older reverberation algorithms from CCRMA at Stanford University

- REVERBIT -- the original Schroeder comb/allpass filter reverb


Following on our discussion of outdoor space/acoustic reflection simulation from last week, we investigated additional approaches to room and space simulation.

Reflections Through Trees

Simple physics gives us the ability to recreate the ideal paths that sound takes when reflected by a set of perfect point-source reflectors (virtual trees!). All we need to do is calculate the distance from the source of the sound to each tree, and the distance from that tree to the listener. The sum of these two distances is the length of each tree-reflected path. It can be used to compute the delay time for that path (assuming sound travels at about 1100 feet/second) and the amount of attenuation due to distance (sound intensity falls off as 1/distance², which means pressure amplitude falls off as 1/distance).
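
The delay and attenuation arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not the code used in class: the function names are hypothetical, positions are assumed to be (x, y) pairs in feet, and the gain is assumed to follow the inverse-distance law for pressure amplitude, expressed relative to the level one foot from the source.

```python
import math

SPEED_OF_SOUND_FT_PER_S = 1100.0  # the approximate figure used in class


def path_length(source, tree, listener):
    """Length in feet of the path source -> tree -> listener."""
    return math.dist(source, tree) + math.dist(tree, listener)


def tree_reflection(source, tree, listener, speed=SPEED_OF_SOUND_FT_PER_S):
    """Return (delay_seconds, gain) for one ideal point-reflector tree.

    Assumption: pressure amplitude falls off as 1/distance, relative to the
    level 1 foot from the source; paths shorter than 1 foot are clamped so
    the gain never exceeds 1.
    """
    length = path_length(source, tree, listener)
    return length / speed, 1.0 / max(length, 1.0)


# A tree 50 ft off to the side, halfway between a source and a listener 100 ft apart.
delay, gain = tree_reflection((0.0, 0.0), (50.0, 50.0), (100.0, 0.0))
print(f"delay {delay * 1000:.1f} ms, gain {gain:.4f}")  # delay 128.6 ms, gain 0.0071
```

For comparison, the direct 100-foot path arrives after about 90.9 ms, so this reflection trails the direct sound by roughly 38 ms.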

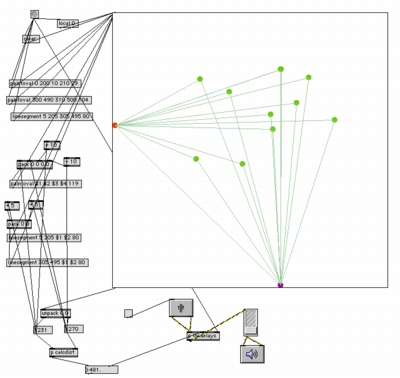

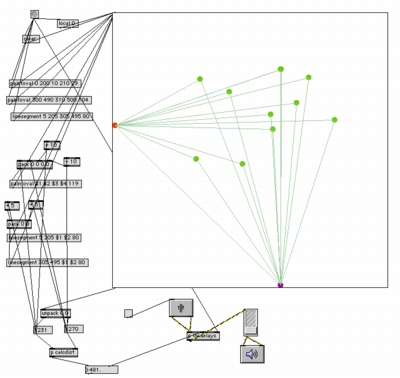

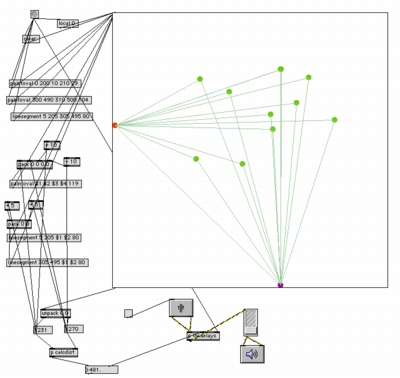

Using this information, we showed a very simple Max/MSP patch that allowed us to place 10 virtual trees relative to a sound source and listener. The patch accepted real-time input and recreated the delay and amplitude pattern for a given set of trees.
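
An offline version of what such a patch does can be sketched as a multi-tap delay: each tree becomes one tap with its own delay and gain, and the output is the sum of delayed, scaled copies of the input. This sketch assumes a 44.1 kHz sample rate, rounds each delay to a whole number of samples (no interpolation), and processes a list of samples rather than real-time input. The function names are hypothetical and do not correspond to Max/MSP objects.

```python
import math

SPEED_OF_SOUND_FT_PER_S = 1100.0
SAMPLE_RATE = 44100  # assumed sample rate


def reflection_taps(source, listener, trees, sample_rate=SAMPLE_RATE):
    """One (delay_in_samples, gain) tap per source -> tree -> listener path."""
    taps = []
    for tree in trees:
        length = math.dist(source, tree) + math.dist(tree, listener)
        delay = round(length / SPEED_OF_SOUND_FT_PER_S * sample_rate)
        taps.append((delay, 1.0 / max(length, 1.0)))  # 1/distance amplitude
    return taps


def apply_taps(signal, taps):
    """Multi-tap delay: sum one delayed, scaled copy of `signal` per tap."""
    longest = max((delay for delay, _ in taps), default=0)
    out = [0.0] * (len(signal) + longest)
    for delay, gain in taps:
        for i, x in enumerate(signal):
            out[i + delay] += gain * x
    return out


# Feeding in a single click exposes the echo pattern of a small stand of trees.
trees = [(30.0, 40.0), (50.0, 50.0), (20.0, 80.0)]
taps = reflection_taps((0.0, 0.0), (100.0, 0.0), trees)
echoes = apply_taps([1.0], taps)
```

The direct source-to-listener path is deliberately left out; including it would just be one more tap.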

The problem with this patch is that it didn't capture the 'spatial' aspect of sound being reflected through a stand of trees. For this, we need to compute stereo delays, one to each virtual ear of the virtual listener. Our ears decode directionality using amplitude cues and timing cues (differences in level and arrival time between the two ears), so our simple model can be extended quite easily just by computing two sound paths for each reflection (one to the left ear, one to the right) between the source and listener.
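
A minimal sketch of the two-path idea computes each reflection twice, once to a left-ear point and once to a right-ear point. The half-foot ear spacing, the assumption that the listener faces the +y direction, and the function names are all hypothetical choices for illustration. With 1/distance attenuation alone, the level difference between the ears comes out tiny; most of the directional cue in this simple model comes from the timing difference, and real heads add acoustic shadowing that the sketch ignores.

```python
import math

SPEED_OF_SOUND_FT_PER_S = 1100.0
EAR_SPACING_FT = 0.5  # hypothetical head width (about six inches)


def ear_positions(listener, spacing=EAR_SPACING_FT):
    """Left and right ear points for a listener assumed to face +y."""
    x, y = listener
    return (x - spacing / 2.0, y), (x + spacing / 2.0, y)


def stereo_reflection(source, tree, listener, speed=SPEED_OF_SOUND_FT_PER_S):
    """Return ((left_delay, left_gain), (right_delay, right_gain)), delays in seconds."""
    to_tree = math.dist(source, tree)
    result = []
    for ear in ear_positions(listener):
        length = to_tree + math.dist(tree, ear)
        result.append((length / speed, 1.0 / max(length, 1.0)))
    return tuple(result)


# A tree off to the listener's right reaches the right ear first, and slightly louder.
left, right = stereo_reflection((0.0, -50.0), (30.0, 10.0), (0.0, 0.0))
itd_ms = (left[0] - right[0]) * 1000.0  # interaural time difference
```

Running each ear's list of reflections through its own delay network gives the left and right output channels.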

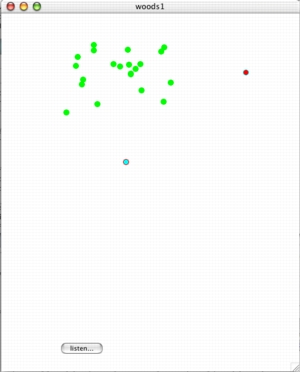

We showed an RTcmix/wxWindows model that did this, using a soundfile (that happy barking Loocher dog) as the source. This time we added a feature allowing us to move the source and the listener, as well as to set up the stand of trees randomly within a selected area. This made it easy to explore a range of different physical layouts and their corresponding reflection patterns.
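
Scattering trees at random and listing the resulting arrivals in time order is one simple way to compare layouts. In this sketch the rectangle bounds, the seed, the function names, and the 1/distance gain are assumptions for illustration. A fixed seed makes a random layout reproducible, so an interesting one can be revisited.

```python
import math
import random

SPEED_OF_SOUND_FT_PER_S = 1100.0


def random_stand(n_trees, x_range, y_range, seed=None):
    """Scatter n_trees point reflectors uniformly inside a rectangle (feet)."""
    rng = random.Random(seed)
    return [(rng.uniform(*x_range), rng.uniform(*y_range))
            for _ in range(n_trees)]


def arrival_pattern(source, listener, trees):
    """(delay_seconds, gain) for the direct sound and each tree, in time order."""
    lengths = [math.dist(source, listener)]
    lengths += [math.dist(source, t) + math.dist(t, listener) for t in trees]
    return sorted((length / SPEED_OF_SOUND_FT_PER_S, 1.0 / max(length, 1.0))
                  for length in lengths)


trees = random_stand(10, (0.0, 200.0), (20.0, 120.0), seed=4)
for delay, gain in arrival_pattern((0.0, 0.0), (100.0, 0.0), trees):
    print(f"{delay * 1000:7.1f} ms  gain {gain:.4f}")
```

Because every bounce path is at least as long as the straight line between source and listener, the direct sound always sorts to the top of the list.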

Room Simulation, Spatializing

The problem with these models is that they ignore the secondary, tertiary, and higher-order reflections that occur between the trees. Contemporary room and space simulation software attempts to model these using a set of short, recirculating delay lines to create a 'diffuse' sound following an initial set of reflections from the walls of a room. We showed existing software that demonstrated the effectiveness of this approach, and also discussed the notion of an "inner room" for speaker-playback simulations (as opposed to headphone, binaural simulations).
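
The recirculating-delay idea is easiest to see in the classic Schroeder structure, the comb/allpass design that REVERBIT is described as implementing. Parallel feedback comb filters, each a delay line whose output is fed back into its input, produce a decaying series of echoes. Allpass filters in series then multiply the echo density while keeping a flat magnitude response. Below is a minimal pure-Python sketch of that structure, not the code of any program mentioned here. The delay lengths (in samples) and gains are illustrative assumptions, and the function names are hypothetical.

```python
def feedback_comb(signal, delay, feedback):
    """Recirculating delay line: y[n] = x[n] + feedback * y[n - delay]."""
    out = []
    for n, x in enumerate(signal):
        recirculated = feedback * out[n - delay] if n >= delay else 0.0
        out.append(x + recirculated)
    return out


def allpass(signal, delay, g):
    """Schroeder allpass: y[n] = -g*x[n] + x[n - delay] + g*y[n - delay]."""
    out = []
    for n, x in enumerate(signal):
        x_old = signal[n - delay] if n >= delay else 0.0
        y_old = out[n - delay] if n >= delay else 0.0
        out.append(-g * x + x_old + g * y_old)
    return out


# Illustrative (delay_samples, gain) settings; not taken from any program above.
COMBS = [(1557, 0.84), (1617, 0.83), (1491, 0.82), (1422, 0.81)]
ALLPASSES = [(225, 0.7), (556, 0.7)]


def schroeder_reverb(signal, tail_samples=44100):
    """Four parallel combs, averaged, then two allpasses in series."""
    x = list(signal) + [0.0] * tail_samples  # room for the decay to ring out
    wet = [0.0] * len(x)
    for delay, feedback in COMBS:
        for i, v in enumerate(feedback_comb(x, delay, feedback)):
            wet[i] += v / len(COMBS)
    for delay, g in ALLPASSES:
        wet = allpass(wet, delay, g)
    return wet
```

Using comb delays that share no simple ratios keeps their echoes from piling up at the same instants, which would otherwise make the tail sound metallic.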

We had described earlier in the class the concept of performance spatializing, or "diffusion," which is fairly popular in European concerts. About the closest we get to this is in THX-like movie theaters, where the attacking spacecraft always approaches from behind the audience. We then built a very crude stereo "spatializer" using Doug Scott's advanced room simulation code: we could 'move' the sound image around by directing our mouse cursor around a modelled listener location. It didn't really work all that well...
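
Doug Scott's code models the room itself. A much cruder way to 'move' a sound image between two speakers is constant-power amplitude panning, which is swapped in here purely to illustrate the idea and is not what the class code did. The sketch maps the direction from the listener to an (x, y) point (for example, a mouse position) onto left/right gains. The assumptions that the listener faces +y, that sources behind the listener fold onto the front, and that level drops as 1/distance are all choices made for this example, and the function name is hypothetical.

```python
import math


def pan_gains(source, listener=(0.0, 0.0)):
    """Constant-power (left_gain, right_gain) for a source at an (x, y) point.

    Assumes the listener faces +y. Sources behind the listener are folded
    onto the front half-plane, because two speakers cannot place them behind.
    """
    dx = source[0] - listener[0]
    dy = source[1] - listener[1]
    azimuth = math.atan2(dx, abs(dy))        # -pi/2 hard left .. +pi/2 hard right
    theta = (azimuth + math.pi / 2.0) / 2.0  # 0 .. pi/2 across the stereo field
    level = 1.0 / max(math.hypot(dx, dy), 1.0)  # 1/distance, clamped near 0
    return math.cos(theta) * level, math.sin(theta) * level
```

Because cos² + sin² = 1, the combined power stays constant as the image sweeps across, avoiding the mid-point dip of linear panning. The fold-to-front rule also shows why a stereo-only spatializer struggles: it has no way to put anything behind the listener.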

Convolution

Francisco ended the class with a discussion of convolution as a room simulation technique. Convolving two signals multiplies their spectra together. Equivalently, in the time domain, every sample of one signal triggers a scaled copy of the other. The result sounds as if one signal were "embedded" in the other. By using the impulse response of a particular space, we can "embed" an arbitrary input signal (our voice, a recording of something else, etc.) in that measured room response and make it sound as if the input signal were inside the room. It is a very effective technique used in many popular signal-processing plugins (Altiverb, Waves, etc.), although in principle it is less flexible than a room or spatial model, since a measured impulse response captures one fixed room with one fixed source and listener placement. You simply haven't lived a complete audio life until you've heard your voice in a room 2 feet wide and 2000 feet long.
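
One way to see convolution is that every input sample launches a copy of the room's impulse response, scaled by that sample's value and starting at that sample's time, and all the copies are summed. The textbook direct-form algorithm below does exactly that, with a hypothetical function name. It costs len(dry) × len(impulse_response) multiplications, so practical convolution reverbs generally use FFT-based fast convolution instead. Nothing here reflects how SoundHack or any plugin is implemented.

```python
def convolve(dry, impulse_response):
    """Direct-form convolution: out[n] = sum over k of dry[k] * ir[n - k]."""
    if not dry or not impulse_response:
        return []
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for k, x in enumerate(dry):
        # Each input sample adds a copy of the impulse response, scaled by x
        # and starting k samples later.
        for j, h in enumerate(impulse_response):
            out[k + j] += x * h
    return out


# A toy "room": the direct sound plus one echo at half amplitude, 3 samples later.
room = [1.0, 0.0, 0.0, 0.5]
print(convolve([1.0, 2.0], room))  # [1.0, 2.0, 0.0, 0.5, 1.0]
```

With a real measured impulse response several seconds long, the same loop produces the full reverberant tail, just very slowly.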

Finite Element Simulation

We forgot to cover this, so we're going to do it next week. We'll also play some more examples of convolved sound.