Multi-Lingual Max/MSP

This is perhaps an unusual article for Cycling '74 to put on their web site, because it has to do with all the languages that Max/MSP isn't. I have to confess that I'm not really a very good Max user. I know only a handful of objects, and I am of the text-based generation that is still a little, um, "graphical-user-interface-challenged". I basically use Max/MSP only as a window onto the computer music languages I am adept at using. More and more, though, I am also using Max as a base platform for connecting these languages to each other. One of the really great decisions the Cycling '74 design team made was to make the development and integration of external objects relatively easy. This has been a real boost for the classes I teach at Columbia University, and it has also given me much more creative freedom in my compositional work.

I had planned to present a paper/demo at the 2007 Spark Festival in Minneapolis, MN. However, snow. Icy precipitation. Two feet of this stuff was on the way. I rebooked my flight to return to the cozy warmth and safety of New York. At breakfast on the day I was now leaving, I was bemoaning to Andrew Pask and Gregory Taylor the fact that I would have to miss the paper session, and Andrew said (you have to imagine an early-morning Kiwi accent): "Hey mate, why don't you write it all up for our web site?"

The Challenge

[NOTE: The following text is written from a Mac OS X perspective, but the concepts should be equally applicable for Windows users. Obviously, the externals I discuss will need to be installed to run the patches (a list of links is at the end of this article). A few of the externals I describe below aren't yet available for Windows, but soon!]

A lot of my work involves developing compositional algorithms using script-based languages such as RTcmix or ChucK. I also employ the timbral capabilities embedded in these languages, as well as in languages like CSound, JSyn and SuperCollider. In addition to all this, I've come to appreciate the data-structuring and control capabilities available through a conceptually-developed language such as JMSL, or a higher-level "pure" computer language like Lisp. Finally, I have discovered the joys of connecting the audio flowing from all these snazzy environments together using applications such as Cycling '74's own Soundflower/Soundflowerbed.

I wondered: what would it be like if I set out to create a patch using all of these languages at once?

The Work

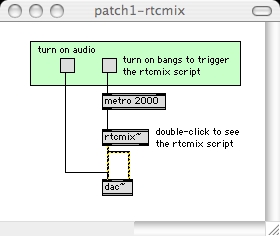

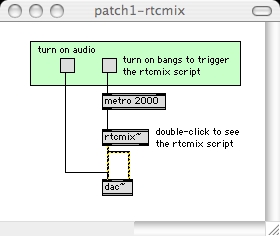

I decide to start my computer music language extravaganza with a randomly-shifting cluster of sawtooth-waveform notes. Because I know RTcmix well, I choose the Max/MSP instantiation of the language, [rtcmix~], to create this effect. (See patch1-rtcmix.pat for this initial sawtooth foray.)


(By the way, this patch represents about 96% of my entire Max/MSP knowledge and capabilities...)
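
For readers who don't use RTcmix, here is a rough idea of what a "randomly-shifting cluster" of sawtooth notes means, sketched in Python rather than in RTcmix's own scripting language. This is not the script inside patch1-rtcmix.pat: the function names, the cents-based random walk and all parameter values are hypothetical choices made for illustration.

```python
import random


def shifting_cluster(center_hz, n_notes, spread_cents, steps, max_step_cents, seed=None):
    """Yield `steps` successive cluster states, each a list of n_notes frequencies in Hz.

    Each note starts at a random offset (in cents) within +/- spread_cents of
    center_hz, then random-walks by up to +/- max_step_cents per step, clamped
    so that it never leaves the +/- spread_cents band.
    """
    rng = random.Random(seed)
    offsets = [rng.uniform(-spread_cents, spread_cents) for _ in range(n_notes)]
    for _ in range(steps):
        # Convert cents offsets to frequencies: 1200 cents per octave.
        yield [center_hz * 2 ** (c / 1200) for c in offsets]
        offsets = [
            max(-spread_cents, min(spread_cents, c + rng.uniform(-max_step_cents, max_step_cents)))
            for c in offsets
        ]


def saw_sample(freq_hz, t_sec):
    """Naive (not band-limited) sawtooth value in [-1, 1) at time t_sec."""
    return 2.0 * ((freq_hz * t_sec) % 1.0) - 1.0


def cluster_sample(freqs, t_sec):
    """Mix one sample of every sawtooth in the cluster, scaled to stay in [-1, 1)."""
    return sum(saw_sample(f, t_sec) for f in freqs) / len(freqs)
```

A naive sawtooth like this aliases badly at high pitches; a real synthesis language would normally use a band-limited oscillator instead.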

In this article, I won't go into the details of how each of these languages works. There is a lot of online documentation for those interested in learning more. Plus, with a little downloading, you can hear for yourself how these demo patches function.

And hear them I do! Sawtooth waves get really annoying after a while. Just ask my wife and kids.

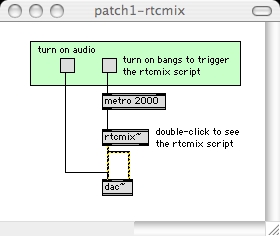

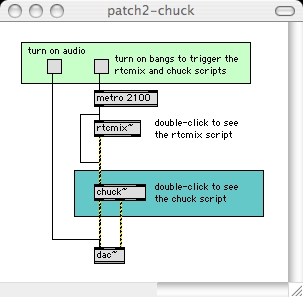

What to do with this annoyance? Filter it! There are many, many ways of using digital filters in Max/MSP, but some of the more interesting filters are included in the ChucK language, available in Max/MSP as the [chuck~] external. (See patch2-chuck.pat.)

I send the sound output of [rtcmix~] through a simple biquad filter in ChucK. The center frequency of the filter is controlled from the Max/MSP environment.
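
The filtering step can be sketched in Python as a two-pole, two-zero (biquad) band-pass whose center frequency is reset from outside, which is roughly the role Max/MSP plays here. The coefficients follow the widely used band-pass formulas (constant 0 dB peak gain) from Robert Bristow-Johnson's "Audio EQ Cookbook". That choice, the default Q of 5 and the class and function names are assumptions for illustration; ChucK's own filter unit generators (BiQuad, BPF and friends) may be parameterized differently.

```python
import math


def bandpass_coeffs(center_hz, q, sample_rate):
    """RBJ cookbook band-pass (constant 0 dB peak gain), normalized so a0 = 1.

    Returns (b0, b1, b2), (a1, a2) for
    y[n] = b0*x[n] + b1*x[n-1] + b2*x[n-2] - a1*y[n-1] - a2*y[n-2].
    """
    w0 = 2.0 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (-2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a


class Biquad:
    """Direct Form I biquad whose center frequency can be changed while running."""

    def __init__(self, center_hz, q=5.0, sample_rate=44100.0):
        self.q = q
        self.sample_rate = sample_rate
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0  # previous inputs and outputs
        self.set_center(center_hz)

    def set_center(self, center_hz):
        # The "control from Max/MSP" hook: recompute coefficients, keep the filter state.
        self.b, self.a = bandpass_coeffs(center_hz, self.q, self.sample_rate)

    def process(self, x):
        b0, b1, b2 = self.b
        a1, a2 = self.a
        y = b0 * x + b1 * self.x1 + b2 * self.x2 - a1 * self.y1 - a2 * self.y2
        self.x2, self.x1 = self.x1, x
        self.y2, self.y1 = self.y1, y
        return y
```

Keeping the delay-line state when set_center() is called lets the center frequency move without resetting the filter, at the cost of occasional small transients when it jumps far.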


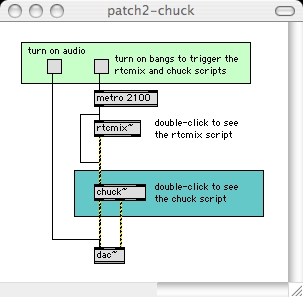

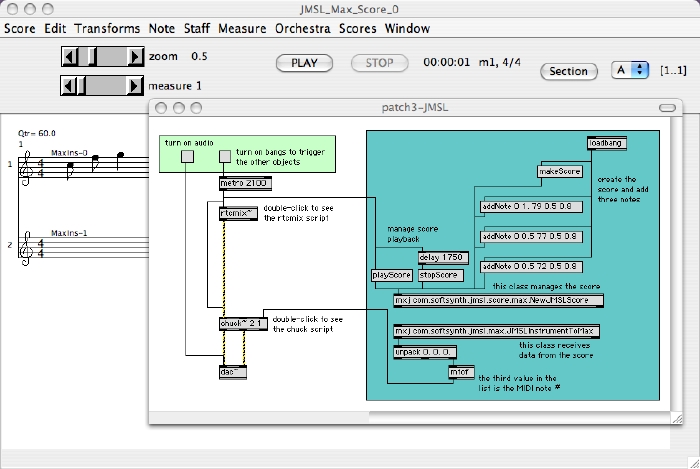

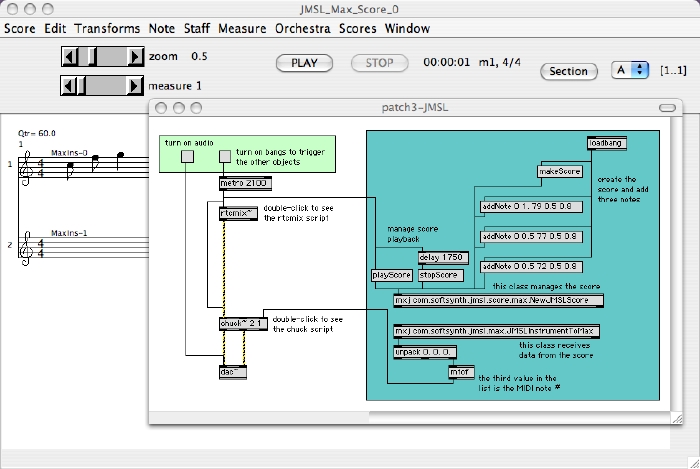

This external filter control turns out to be a really good thing, because in a silly fit of Western compositional conscience I decide that I need to set the filter center frequencies using Real Live Musical Notation. For this, I can use JMSL. JMSL is a Java-based music notation and hierarchical scheduling language that has been ported to work in the [mxj] context. (See patch3-JMSL.pat.)


JMSL has many capabilities, and I could have used it (or any of these languages, for that matter) to control the performance evolution of the patch. Instead, I'm using a [metro] object to cycle the JMSL note sequence repeatedly and drive the [rtcmix~] and [chuck~] objects. Simplicity is my goal.
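
Turning notated pitches into filter center frequencies, one note per [metro] tick, amounts to a pitch-name-to-frequency conversion plus a sequence that wraps around. The Python sketch below assumes twelve-tone equal temperament with A4 = 440 Hz and scientific pitch names (C4 = middle C = MIDI note 60). It does not use JMSL's own notation classes, and the function names are hypothetical.

```python
import itertools

# Semitone offsets of the natural pitch classes above C.
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_to_midi(name):
    """Convert a scientific pitch name such as 'C4', 'F#3' or 'Bb2' to a MIDI note number.

    Uses the common convention C4 = middle C = 60; '#' raises and 'b' lowers a semitone.
    """
    letter, rest = name[0].upper(), name[1:]
    accidental = 0
    while rest and rest[0] in "#b":
        accidental += 1 if rest[0] == "#" else -1
        rest = rest[1:]
    octave = int(rest)
    return 12 * (octave + 1) + NOTE_OFFSETS[letter] + accidental


def midi_to_hz(midi_note, a4_hz=440.0):
    """Twelve-tone equal temperament, with A4 (MIDI 69) tuned to a4_hz."""
    return a4_hz * 2 ** ((midi_note - 69) / 12)


def metro_ticks(notated_sequence, n_ticks):
    """Return one filter center frequency per metro tick, cycling through the sequence."""
    notes = itertools.cycle(notated_sequence)
    return [midi_to_hz(note_to_midi(next(notes))) for _ in range(n_ticks)]
```

For example, metro_ticks(["A4", "A3"], 3) steps through A4 and A3 and then wraps back to A4 on the third tick, which is the same "cycle the sequence repeatedly" behavior the [metro] provides in the patch.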

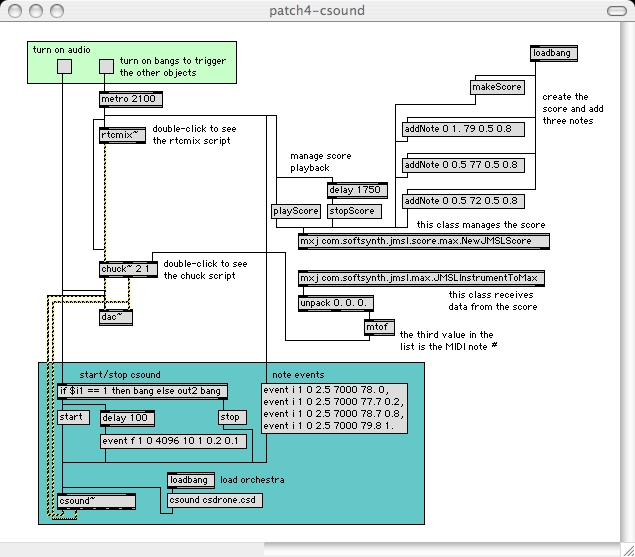

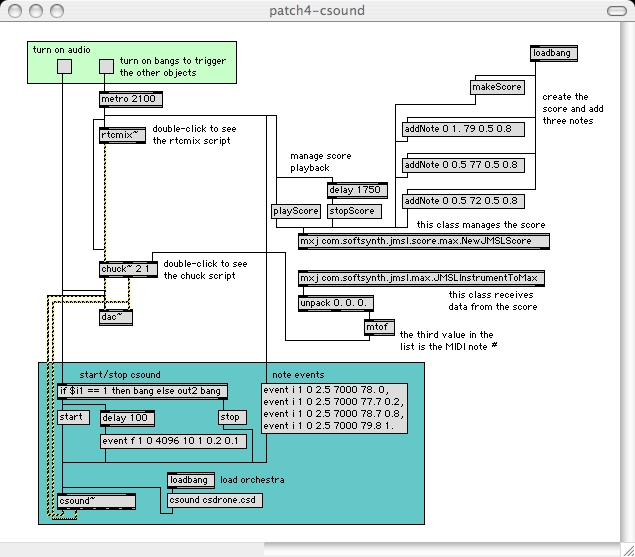

As fascinating as these nice, musically-filtered sawtooth sounds are, my personal aesthetic is begging me to add a decent droney bass sound. CSound to the rescue! It so happens that I had already built a CSound orchestra to make a droney bass sound. Including it in the growing patch is almost trivial using the [csound~] object, although a bit of additional work is needed to set the score up properly. (See patch4-csound.pat; the csdrone.csd CSound score/orchestra file is included.)


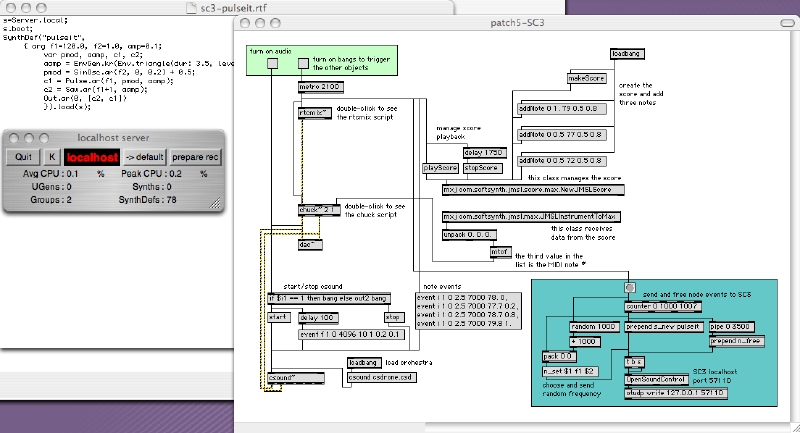

The icing on the cake for this mash of languages is the inclusion of some high-pitched pulse-width-modulation sounds using the SuperCollider (SC3) language. SC3 is different from the previous languages in that it runs as a separate application 'outside' Max/MSP. By employing the Open Sound Control (OSC) protocol, which SC3 uses for triggering scripts and events, we can exercise coordinated control from within our Max/MSP patch.

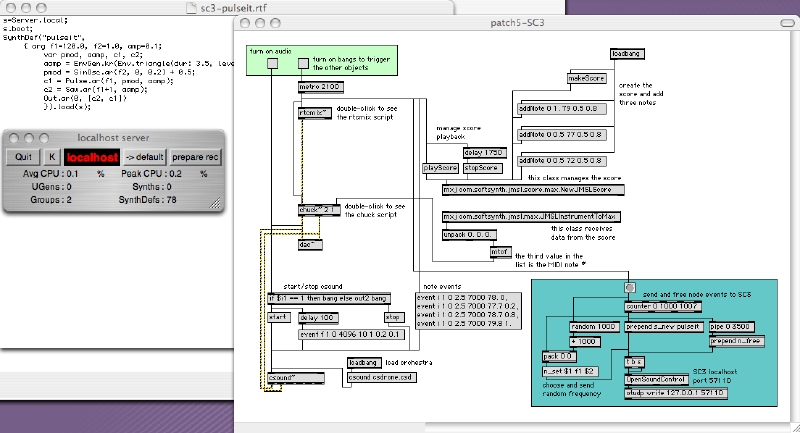

(See patch5-SC3.pat. To run the patch, you will also need to load the sc3-pulseit.rtf script into SC3, select it, and interpret it.)
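
Under the hood, an OSC message is a small binary packet, usually sent over UDP: a padded address string, a padded type-tag string, then the big-endian arguments. Here is a minimal Python sketch of the OSC 1.0 encoding for int32, float32 and string arguments. The address /pulseit, and the assumption that the SC3 script listens in sclang on its default port 57120 (scsynth's default is 57110), are hypothetical; check what sc3-pulseit.rtf actually registers before relying on either.

```python
import socket
import struct


def _osc_string(s):
    """Encode an OSC-string: ASCII bytes, NUL-terminated, padded to a multiple of 4."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Build an OSC 1.0 message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):  # bool is a subclass of int; reject it explicitly
            raise TypeError("booleans are not supported in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(tags) + payload


def send_osc(message, host="127.0.0.1", port=57120):
    """Send one OSC packet over UDP (port 57120 assumes sclang's default)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
```

For example, send_osc(osc_message("/pulseit", 1, 0.25)) would send one integer and one float to that hypothetical address. Inside Max, the patch does the equivalent job with its own UDP/OSC objects.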


The big drawback to the relative 'outside'-ness of the SC3 application is that its audio output is sent to the computer's DACs through a completely separate audio stream. What if I wanted to pipe the SC3 audio through a happy ChucK filter? There is a way to do this, but it does require configuring the Cycling '74 Soundflower virtual audio device using its companion application, Soundflowerbed.app.

What I need to do is set up Soundflower/Soundflowerbed to route the audio coming from SC3 into our Max/MSP patch. There are two ways to do this, and I'm going to describe the "default" way.

SC3 normally writes audio to the plain-old two-channel output of the computer. We will use Soundflower/Soundflowerbed to reroute this output into Max/MSP, and choose a different pair of Soundflower channels to audition the audio from our ever-growing Max/MSP patch. Here's how:

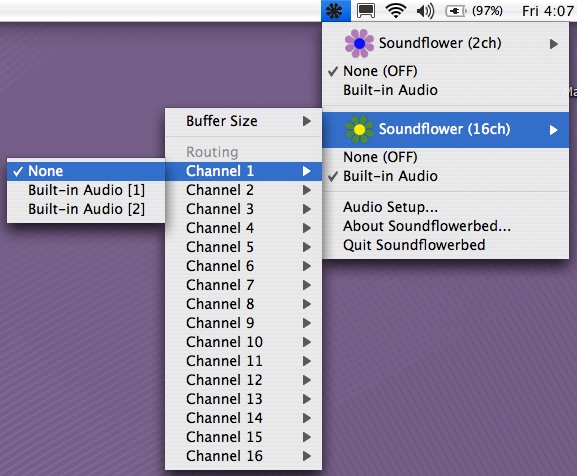

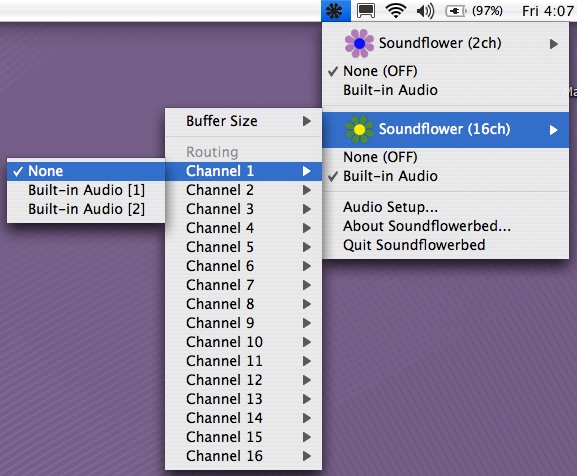

1. Start up the Soundflowerbed application. We are going to select two of the 16 channels to be our "audio monitoring" output. To make them easy to remember, let's use the last two (channels 15 and 16). From the Soundflowerbed drop-down menu, under the Soundflower (16 ch) listing, make sure that channels 1-14 have None checked for their routing:


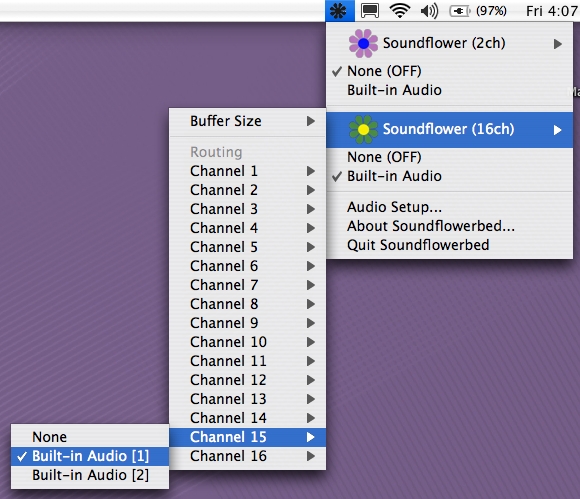

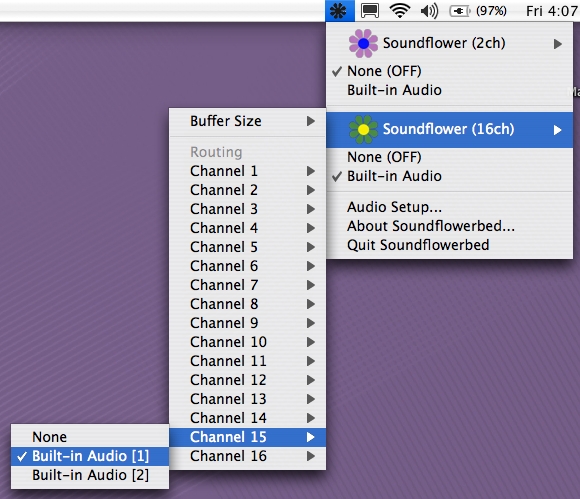

Set the routing for channels 15 and 16 to Built-in Audio [1] and Built-in Audio [2]:

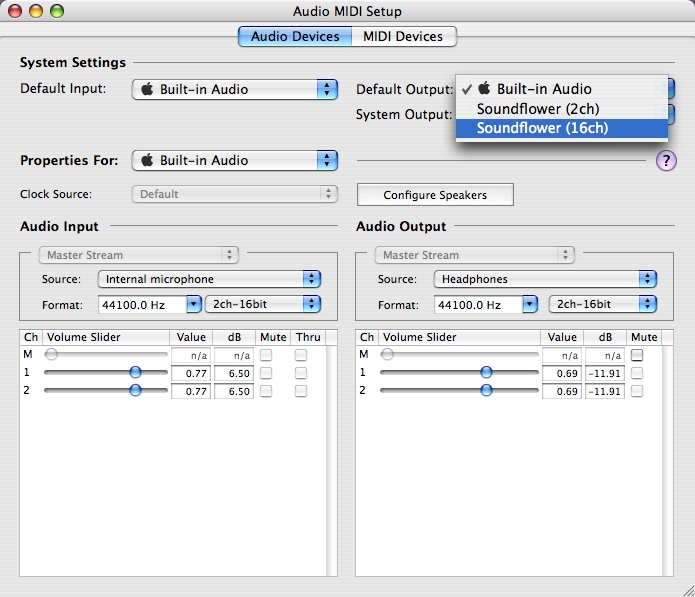

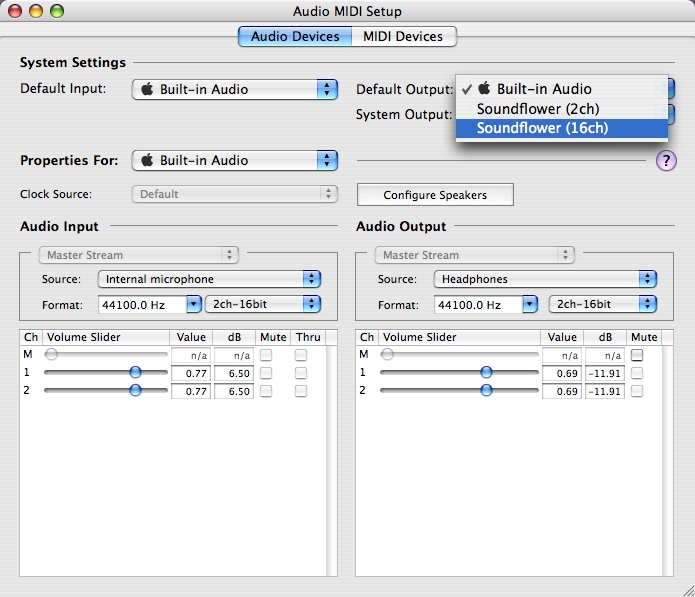

2. Next, we want to set the default system audio output to go to Soundflower. Select the Audio Setup... menu item from Soundflowerbed. This will bring up the Audio MIDI Setup utility. Set the Default Output to Soundflower (16 ch):

[NOTE: Be careful: once you set the Audio MIDI Setup default output to Soundflower (16 ch), it will affect other applications. You will probably want to reset it to Built-in Audio when you are finished.]

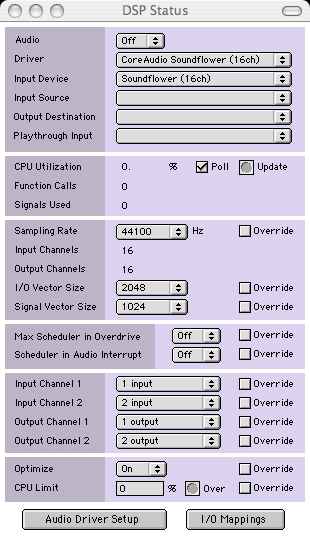

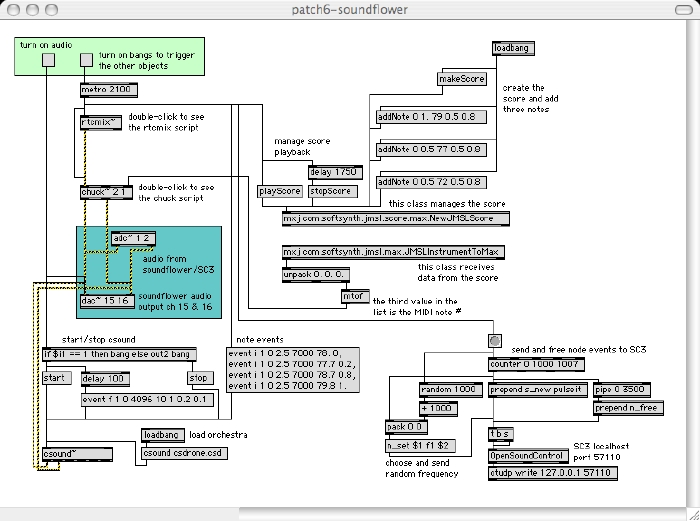

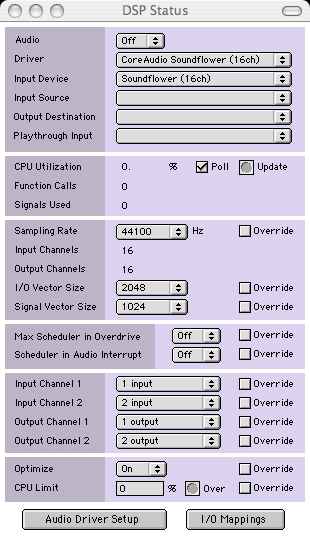

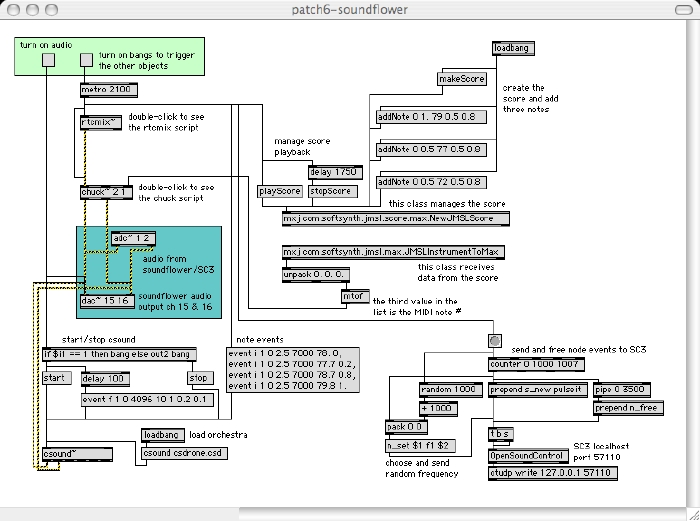

3. Now start up the patch6-soundflower Max/MSP patch, and use the DSP Status... window (under the Options menu) to set the Driver and the Input Device to Soundflower (16ch). At this point, the audio output from our original patch is disabled, or at least can't be heard.

4. To make the audio audible again, and to route the default output of SC3 into our patch, I need to change the patch so that it sends audio to [dac~] outputs 15 and 16 (the "monitored" outputs) and brings the SC3 audio in from [adc~] inputs 1 and 2 (the default SC3 channels). This is easy to do by giving the channel numbers as arguments to the [dac~] and [adc~] objects, as in [dac~ 15 16] and [adc~ 1 2]. (See patch6-soundflower.pat.)


At this point, start up SC3, read the script, fire up the Max/MSP patch, and sound!

There are two points I should make about the SC3 routing. The first is that SC3 is smart enough to take advantage of all the Soundflower inputs and outputs, so it is not necessary to use the default: the SC3 output UGens can write to any of the Soundflower channels directly.

The second point is slightly more subtle, but it hints at an extraordinary world of possibility: the output of any audio program can be routed into Max/MSP using Soundflower. In concert, I have used the marvelous SPEAR analysis/resynthesis program as a performance interface to "scrub" sound analyses, and then processed the resulting sound in Max/MSP. Similarly, the output of the Max/MSP patch itself can be sent elsewhere, perhaps directly to a program like Digital Performer for recording or additional processing.

The only drawback is that any program without the ability to address all the Soundflower channels (unlike SC3) will only be able to write to the default channels 1 and 2, so the outputs of several 'external' applications may wind up being mixed together inadvertently.

Now SC3 audio is flowing through the patch! Yay! I can filter it, I can mangle or munge it, I can mix it willy-nilly with the rest of my sounds. But instead I'm going to make one final addition to the patch, using a more general computer language.

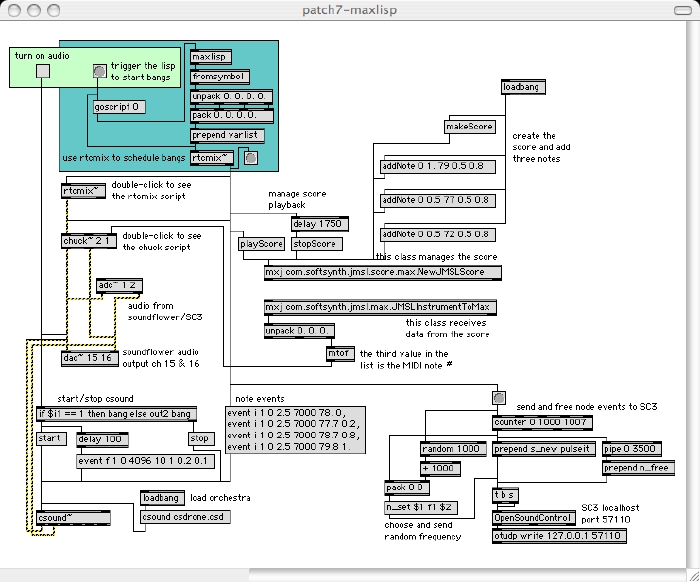

As happy as I have been with the [metro] controlling the triggers of the different languages in the patch, I would much prefer to use a fractal technique to determine starting points for triggers. I'm just that kind of guy. Is it possible to do this by concocting some bizarre dataflow combination of [metro], [uzi], [if], or some other esoteric set of 'standard' Max/MSP objects? Perhaps, but it would take someone with a brain radically different from my own to make it happen.

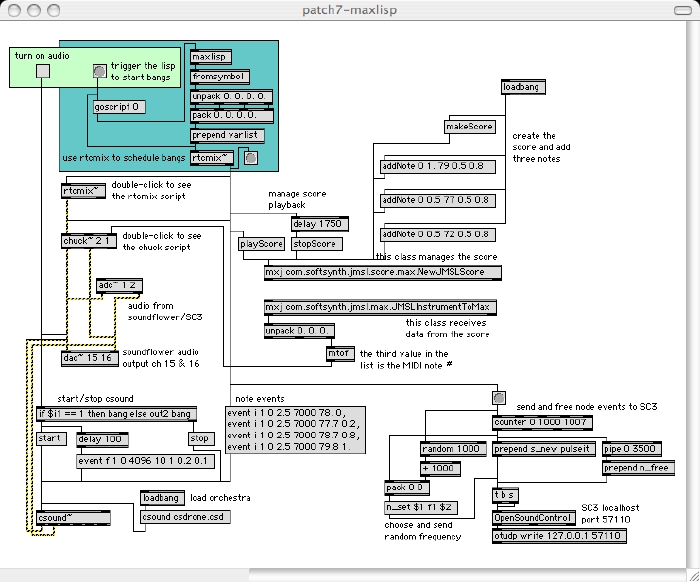

Instead, I'm going to turn to the ur-recursive language Lisp to do the work in a few simple lines of code. The [maxlisp] object lets me do this. (See patch7-maxlisp.pat; the Lisp code is in the file fract.lisp.)
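
The fract.lisp code itself isn't reproduced here, so as a stand-in, here is one classic fractal way to pick trigger start times, written in Python in a recursive, Lisp-like style: the Cantor set, which keeps the first and last thirds of a time span and recurses on each. The Cantor construction is a substitution for illustration only, not necessarily the technique used in fract.lisp, and the function names are hypothetical.

```python
def cantor_onsets(start, length, depth):
    """Onset times of a Cantor-set rhythm.

    Split [start, start + length) into thirds, drop the middle third, and recurse
    on the two outer thirds `depth` times; return the start of each surviving segment.
    """
    if depth == 0:
        return [start]
    third = length / 3.0
    return (cantor_onsets(start, third, depth - 1)
            + cantor_onsets(start + 2.0 * third, third, depth - 1))


def inter_onset_intervals(onsets):
    """Delays between successive triggers, e.g. to schedule them one after another."""
    return [later - earlier for earlier, later in zip(onsets, onsets[1:])]
```

For example, cantor_onsets(0.0, 9.0, 2) gives onsets at 0, 2, 6 and 8 (in whatever time unit you choose), so the gaps between triggers are 2, 4 and 2: the same long-short pattern repeats at every level of the recursion.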


My work is done! Five separate computer-music languages and one general-purpose (and also separate) language, all mashed together via Max/MSP into one cooperating mass of sound! And I haven't even hinted at the thrills to be had by employing powerful but more Max/MSP-friendly externals like Peter Castine's Litter package or Peter Elsea's Lobjects. And Java! And JavaScript! Yikes!

The Final Observations

I'd like to make two final comments. The first is a plea to developers, and the second is a goad to Max/MSP users. For developers: think shared libraries and loadable bundles! The Soundflower routing for SC3 above works, but I would much prefer to access SC3 functionality directly from within Max/MSP. Or, for that matter, I wouldn't mind being able to access all of Max/MSP within SC3. The basic idea is that I like the tight coupling that can be gained by embedding one application directly inside another. I've used network approaches in the past, and although they work well for most situations, the ability to integrate control very closely can lead to much better standalone interfaces and applications.

I would love to see the day when bringing in an external application is a simple matter of bundling it properly, finding the right entry points, and then using the application completely inside an alternative development environment.

The prod to Max/MSP users: I have to bite my e-mailing tongue on the Max/MSP mailing lists when I read about people struggling to create and access something as simple as indexed arrays of values. A small investment in learning something like JavaScript, or an even simpler language like RTcmix or ChucK with rudimentary data-structuring capabilities, would solve many of these problems in one or two lines of code. Yes, lines of code. There are some things that a dataflow approach just isn't all that good at doing (read your Knuth). Why not take advantage of all the tools available?
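
To make the "one or two lines" point concrete, here is what creating, reading, writing and growing an indexed array of values looks like in Python; JavaScript and ChucK have close equivalents. The pitch values are arbitrary examples.

```python
# An indexed array of values (arbitrary example MIDI pitches).
pitches = [60, 62, 63, 67, 70]

third = pitches[2]      # read the value at index 2 (indices start at 0)
pitches[4] = 72         # overwrite the value at index 4
pitches.append(74)      # grow the array by one element
```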

And available they are! Almost everything I've used above is downloadable for free or for a very modest sum, and there are many more packages ready for the asking/using. As an educator intent on teaching a wide range of approaches (I believe that different languages do in fact structure our creative thinking differently, but that's a whole 'nother article), I find the ability of Max/MSP to "glue" them all together a real boon. More importantly, the extended capabilities to which I now have access make composing and creating music downright enjoyable again. All those sounds!

If you are interested, the original SPARK paper that I did not present because of my snow-fear is here. But you've pretty much read it already.

Brad Garton

Professor of Music

Director, Columbia University Computer Music Center

http://music.columbia.edu/~brad

Here is an alphabetical listing of many of the objects and languages discussed above: