"Throwing in a mouse" points to an aspect of computers that makes them rather unique as musical tools. The interface between myself and computer sound synthesis can be changed with minor hardware changes or (more significantly) by changing the software used to interpret my commands. I find this quite intriguing; being able to control the interface I use to create music gives me a very high-level "conceptual knob" that I can use to change the way I interact with my music making. I might choose to control sound production with a set of stochastic procedures, or I might use graphics software to interpret curves I draw in certain ways, or I might even write an algorithm to translate the words and letters of this paper into sound. In each case, the shape of the resulting piece (and the way I think about it as I produce it) will be different.

The Elthar program represents an explicit attempt on my part to come to terms with this ability to design an interaction for composing. Elthar is a natural language command interpreter for performing signal processing tasks. To make the interaction between myself and Elthar more interesting, I also implemented some ideas about learning and memory organization in the program. This paper discusses some of the technical aspects of Elthar and briefly describes some of the interactions that developed as I used the program to produce the piece There's no place like Home.

I decided to use this verbal interaction as the model for Elthar. Several components of the model are immediately apparent:

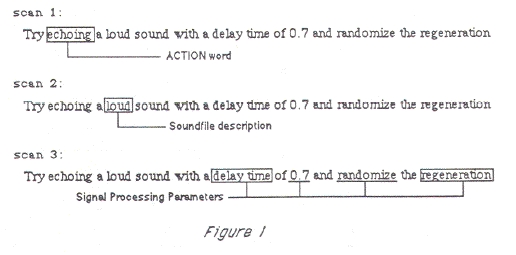

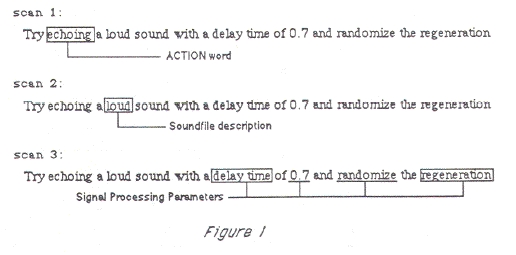

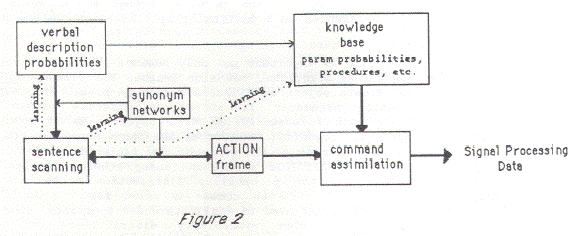

It is difficult to separate the procedures used by Elthar to parse the input sentence from the organization and operation of Elthar's knowledge-base. One of the primary reasons for this difficulty is that Elthar never generates a "complete" parse of the sentence. Instead, Elthar repeatedly scans the sentence (or fragments of the sentence) to extract the information contained within it. The action of these scans causes the "parsing" to resemble Schank's Conceptual Dependency parsing methodology (Schank and Abelson, 1977) coupled with Minsky's "frames" concept for organizing program procedures and data (Minsky, 1975).

The first scan done by Elthar attempts to ascertain the main "verb" of the sentence (I refer to this as the ACTION word). Elthar scans for a word by comparing each word of the sentence to the words in a group of "synonym networks", or lists of words with an identical underlying meaning (or concept). The group of synonym networks searched during a particular scan is determined by the type of scan being done. In the first scan, the group searched consists of all of the ACTIONs known to Elthar. For example, in figure 1 the ACTION scan determines that the word "echoing" is a member of the synonym network for the ACTION ECHO. This detection terminates the scan and signals that an instance of an ECHO ACTION word has been found.
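The ACTION scan can be pictured as a lookup over synonym networks that stops at the first match. The following is a minimal Python illustration, not Elthar's own code: the network contents, the function name `scan`, and the simple whitespace tokenization are all assumptions, and the example request is invented rather than taken from figure 1.

```python
from typing import Optional

# Hypothetical synonym networks: each ACTION concept maps to words that
# share its underlying meaning. The entries are illustrative only.
ACTION_NETWORKS: dict[str, set[str]] = {
    "ECHO": {"echo", "echoing", "echoes", "repeat"},
    "REVERB": {"reverb", "reverberate", "reverberating"},
    "FILTER": {"filter", "filtering", "muffle"},
}


def scan(sentence: str, networks: dict[str, set[str]]) -> Optional[str]:
    """Return the concept of the first sentence word found in any network.

    The scan terminates at the first match, as the ACTION scan does.
    """
    for word in sentence.lower().split():
        word = word.strip(".,!?\"'")  # drop surrounding punctuation
        for concept, synonyms in networks.items():
            if word in synonyms:
                return concept
    return None  # no known ACTION in this sentence


print(scan("Try echoing the loud sound", ACTION_NETWORKS))  # ECHO
```

Other scans would reuse the same routine with a different group of networks (descriptions, soundfile names, and so on), which is what makes the choice of networks depend on the type of scan.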

Determining the input soundfile is important not only because the input filename is needed by the signal processing algorithm, but also because the filename/ACTION word combination is used as a data object in Elthar's knowledge-base. Information about every signal processing parameter is stored as discrete probability distributions associated with each filename/ACTION data object. As an example, suppose that Elthar had chosen the soundfile thunder as the input soundfile for the request of figure 1. After all scanning of the sentence had finished, Elthar would still be lacking a value for the AMPLITUDE parameter (among others) of the ECHO algorithm. Elthar would then use the probability distribution stored on the thunderECHO data object under AMPLITUDE to choose a value for the AMPLITUDE parameter. This is the mechanism Elthar uses to deal with under-specified requests. I decided to keep separate parameter probability distributions for each filename/ACTION object because the use of the signal processing algorithms depends heavily on what sound is being processed -- I would use a different set of parameters for a "loud" sound than I would for a "soft" sound to achieve a certain effect.
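A minimal sketch of how a filename/ACTION data object could fill in an under-specified request, assuming each parameter's discrete distribution is stored as a mapping from candidate values to weights. The `KNOWLEDGE` table, its values, and the helper names are hypothetical.

```python
import random

# Hypothetical knowledge-base: (soundfile, ACTION) -> parameter -> {value: weight}.
# The values and weights are invented for illustration.
KNOWLEDGE: dict[tuple[str, str], dict[str, dict[float, float]]] = {
    ("thunder", "ECHO"): {
        "AMPLITUDE": {0.25: 0.2, 0.5: 0.5, 0.75: 0.3},
        "DELAY": {0.1: 0.6, 0.3: 0.4},
    },
}


def sample(dist: dict[float, float], rng: random.Random) -> float:
    """Draw one value from a discrete distribution given as {value: weight}."""
    values = list(dist)
    return rng.choices(values, weights=[dist[v] for v in values], k=1)[0]


def complete_request(filename: str, action: str,
                     given: dict[str, float], rng: random.Random) -> dict[str, float]:
    """Keep the values stated in the request; sample every missing parameter."""
    params = dict(given)
    for name, dist in KNOWLEDGE[(filename, action)].items():
        if name not in params:
            params[name] = sample(dist, rng)
    return params


# The request named a delay time but said nothing about AMPLITUDE.
print(complete_request("thunder", "ECHO", {"DELAY": 0.2}, random.Random(1)))
```

Keying the table on the (soundfile, ACTION) pair is what lets the same algorithm be driven differently for a "loud" sound and a "soft" one.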

In the example of figure 1, Elthar had to choose an input soundfile based on the description "loud". When scanning for information that can be described verbally, Elthar calls upon another set of discrete probability distributions to make choices. Each description word has associated with it a set of probabilities over the items that the word describes. The soundfile description "loud" might contain the following list:

(.31 - thunder; .07 - rain; .14 - wind; .48 - crash)

The probability distributions used to make choices from description words are learned and altered by my telling Elthar how an item is described. For instance, if I tell Elthar "The thunder soundfile is an ominous sound", then Elthar will either enhance the probability that the thunder soundfile will be chosen for the OMINOUS description (or whatever descriptive synonym network is represented by the word "ominous") or will construct a new description probability distribution for OMINOUS if none exists. This interaction can occur at any point during my conversation with Elthar, and it can refer to any known item that Elthar understands through verbal descriptions (usually soundfiles). Elthar will also understand negative descriptions ("The thunder is not very loud") and adjust the probabilities accordingly. New synonyms are defined to Elthar in much the same way. I can say "The word strange means odd" or "Big means large" and Elthar will add the new synonym to all of the synonym networks that have the defining word as a member.
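The description mechanism can be approximated as follows. The paper does not say by how much a description shifts the probabilities, so the additive `STEP`, the `FLOOR`, and the renormalization are assumptions. The `LOUD` distribution is the example list given earlier; every other name and network entry is hypothetical. For brevity, `describe` takes a concept name directly rather than first mapping a word such as "ominous" to its synonym network.

```python
import random

# Hypothetical description networks: concept -> words that express it.
description_networks: dict[str, set[str]] = {
    "LOUD": {"loud", "noisy"},
    "ODD": {"odd", "weird"},
    "LARGE": {"large", "huge"},
}

# Description distributions: concept -> {soundfile: probability}.
descriptions: dict[str, dict[str, float]] = {
    "LOUD": {"thunder": 0.31, "rain": 0.07, "wind": 0.14, "crash": 0.48},
}

STEP = 0.2    # hypothetical learning increment
FLOOR = 0.01  # hypothetical floor so a description never fully excludes an item


def _normalize(dist: dict[str, float]) -> None:
    total = sum(dist.values())
    for item in dist:
        dist[item] /= total


def describe(item: str, concept: str, negative: bool = False) -> None:
    """Update `concept` after 'The <item> is <concept>' (or 'is not <concept>')."""
    if negative:
        dist = descriptions.get(concept)
        if dist is None or item not in dist:
            return  # nothing to weaken
        dist[item] = max(dist[item] - STEP, FLOOR)
    else:
        # Creates the distribution the first time the concept is used.
        dist = descriptions.setdefault(concept, {})
        dist[item] = dist.get(item, 0.0) + STEP
    _normalize(dist)


def choose(concept: str, rng: random.Random) -> str:
    """Pick an item (usually a soundfile) that fits the description."""
    dist = descriptions[concept]
    items = list(dist)
    return rng.choices(items, weights=[dist[i] for i in items], k=1)[0]


def define_synonym(new_word: str, defining_word: str) -> None:
    """'The word <new_word> means <defining_word>': extend every matching network."""
    for words in description_networks.values():
        if defining_word in words:
            words.add(new_word)


describe("thunder", "OMINOUS")                # builds a new OMINOUS distribution
describe("thunder", "LOUD", negative=True)    # "The thunder is not very loud"
define_synonym("strange", "odd")
print(choose("LOUD", random.Random(0)))
```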

Elthar learns how to use the signal processing algorithms by "observing" what values I choose for algorithm parameters (or "randomly" chosen values, at my request) and soliciting my response to the operation. Suppose that Elthar had performed the action requested in the sentence of figure 1, and that upon listening I decided that I didn't particularly care for my choice of delay time but found the regeneration value Elthar used very appropriate for that soundfile. Suppose also that the attack portion of the overall amplitude curve was to my liking. Elthar would ask me "what I thought" of the sound it had synthesized. Responses such as "Save the regeneration value and the fade-in but not the delay time" or "I liked the fade-in and the regen factor" will cause Elthar to change the probability distributions for those parameters. This will then affect Elthar's choices for those parameters when the knowledge-base is consulted in the future.
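One way such a response might update the parameter distributions is sketched below. The paper does not specify the update rule; this sketch assumes that each liked parameter's distribution gains a fixed `BOOST` at the value actually used and is then renormalized, while unmentioned or rejected parameters are left unchanged. Parameter names and values are hypothetical.

```python
# Hypothetical distributions stored on a thunder/ECHO data object:
# parameter -> {value: probability}. Values are invented for illustration.
thunder_echo: dict[str, dict[float, float]] = {
    "DELAY": {0.1: 0.5, 0.3: 0.5},
    "REGENERATION": {0.4: 0.5, 0.8: 0.5},
    "FADE_IN": {0.05: 0.5, 0.5: 0.5},
}

BOOST = 0.25  # hypothetical reinforcement amount


def reinforce(dists: dict[str, dict[float, float]],
              used: dict[str, float], liked: set[str]) -> None:
    """Shift probability toward the values used for the parameters I liked.

    Parameters not in `liked` (e.g. the rejected delay time) are left as-is.
    """
    for name in liked:
        dist = dists[name]
        value = used[name]
        dist[value] = dist.get(value, 0.0) + BOOST
        total = sum(dist.values())
        for v in dist:
            dist[v] /= total


# "Save the regeneration value and the fade-in but not the delay time."
used = {"DELAY": 0.3, "REGENERATION": 0.8, "FADE_IN": 0.05}
reinforce(thunder_echo, used, liked={"REGENERATION", "FADE_IN"})
print(thunder_echo["REGENERATION"])  # {0.4: 0.4, 0.8: 0.6}
```

Treating each parameter independently, as here, is exactly the simplification the next paragraph acknowledges as problematic.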

I realize that using subjective criteria (my "likes") and treating each parameter as an independent entity is quite problematic. My goal was to have Elthar "track" my thoughts about the sounds being processed without rote memorization. Elthar keeps separate probability distributions for every soundfile it knows; thus subtle variations in my treatment of different sounds will be reflected in the knowledge-base. One of the modifications I am planning to Elthar is to allow it to "introspectively" change its probability distributions. This introspection may take the form of simple numerical analyses (searching for correlations, simple linear or inverse relationships between parameters, etc.) or possibly more complex analyses of data trends and clustering such as those discussed in Michalski, Carbonell and Mitchell (1983).

It takes some time for Elthar to develop detailed probability distributions for each soundfile. A solution to this problem is to have new soundfiles "inherit" data from existing soundfiles. When a new soundfile is introduced, Elthar asks what other soundfiles are "like" the new file. The interesting feature of this inheritance is that Elthar creates the new soundfile data through a linear combination of the probability distributions from the analogous sounds. Thus the new file has data that are unique, but at the same time contain salient characteristics of similar files. This simple analogy-forming technique proved to be very useful as my interaction with Elthar developed.
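The inheritance step can be written as a weighted mixture of the analogous files' distributions. This is a sketch under assumptions: the paper says only that a linear combination is used, so the equal default weights and the final renormalization are choices made here, and the example data are invented.

```python
from typing import Optional

Dists = dict[str, dict[float, float]]  # parameter -> {value: probability}


def inherit(sources: list[Dists], weights: Optional[list[float]] = None) -> Dists:
    """Build data for a new soundfile as a weighted mix of analogous files.

    `sources` holds the distributions of the files the new sound is "like";
    `weights` gives one weight per source (equal weights if omitted).
    """
    if weights is None:
        weights = [1.0 / len(sources)] * len(sources)
    blended: Dists = {}
    for dists, w in zip(sources, weights):
        for param, dist in dists.items():
            target = blended.setdefault(param, {})
            for value, p in dist.items():
                target[value] = target.get(value, 0.0) + w * p
    # Renormalize, since a parameter may appear in only some of the sources.
    for dist in blended.values():
        total = sum(dist.values())
        for value in dist:
            dist[value] /= total
    return blended


thunder = {"DELAY": {0.1: 0.8, 0.3: 0.2}}
crash = {"DELAY": {0.3: 0.6, 0.5: 0.4}}
print(inherit([thunder, crash]))  # a new file "like" thunder and crash
```

Because the result mixes rather than copies, a value favored by only one parent survives with reduced weight, which is how the new file can be unique while still carrying traits of its sources.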

Elthar also has the ability to assimilate "scripts" into its knowledge-base and recall them as commands in subsequent conversations. These scripts are simply sentences grouped together under a single command name. The sentences are interpreted sequentially as if they had been entered at the terminal. Elthar does maintain some "conversational continuity" throughout the duration of a script, allowing items such as the input or output file to be implied in the sentences of a script rather than explicitly referenced. Although this is more conversational context than is maintained during "normal" interaction with Elthar, it is not complete in any sense of the term. Variables or parameter values cannot be passed from one sentence to the next, nor is Elthar able to recall what operations were done previously during the execution of a script. Even with these handicaps, complex sequences of signal processing operations can be easily defined and used by Elthar.
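A rough sketch of script storage and playback, assuming that the only context carried between sentences is the current soundfile, with an implied input taken to be the previous sentence's output. Parsing and signal processing are stubbed out; `KNOWN_FILES`, `define_script`, `run_script`, and the output-naming scheme are hypothetical.

```python
from typing import Optional

KNOWN_FILES = {"thunder", "rain", "wind", "crash"}  # hypothetical soundfiles
scripts: dict[str, list[str]] = {}


def define_script(name: str, sentences: list[str]) -> None:
    """Store a group of sentences under a single command name."""
    scripts[name] = list(sentences)


def run_script(name: str) -> list[tuple[str, str, str]]:
    """Interpret a script's sentences in order, carrying only the file context.

    Returns (sentence, input file, output file) for each step. No parameter
    values or record of earlier operations are passed between sentences.
    """
    current: Optional[str] = None  # the only state shared between sentences
    log: list[tuple[str, str, str]] = []
    for step, sentence in enumerate(scripts[name], start=1):
        words = [w.strip(".,!?").lower() for w in sentence.split()]
        named = [w for w in words if w in KNOWN_FILES]
        source = named[0] if named else current  # explicit file, else implied
        if source is None:
            raise ValueError(f"no input soundfile for: {sentence!r}")
        output = f"{name}-{step}"  # hypothetical output naming scheme
        # A real interpreter would scan for the ACTION and run the DSP here.
        log.append((sentence, source, output))
        current = output
    return log


define_script("storm", ["Echo the thunder.", "Now reverberate it."])
for entry in run_script("storm"):
    print(entry)
```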

The sounds that resulted from these test scripts really caught my ear. They weren't at all what I had planned to do, but at the same time they had a fascinating beauty all their own. In attempting to decipher how Elthar had chosen the signal processing parameters used to create the sounds, I began to wonder how the knowledge had been derived for the soundfiles used as input to the debugging scripts. I had originally taken time to develop fairly extensive probability distributions for several soundfiles. All of the data for the other files I was using were derived from these original files through Elthar's analogy-forming ability. This was done mainly in the interests of time (after all, I was only testing the program). It was much easier for me to tell Elthar what files a new soundfile was "like" than to build new data each time I created a new sound -- this was, after all, why I developed the analogy mechanism in the first place.

My discovery of the sounds resulting from the scripts caused me to think anew about how knowledge propagated through Elthar's knowledge-base. The evolution of Elthar's knowledge as new soundfiles were added reminded me of the biological evolution of genetic information. I started to view soundfiles as "populations", the attendant signal processing parameter data being the "gene pool" surrounding the "populations". The scripts functioned as signal processing "environments" through which the soundfile populations evolved. I played the role of natural selection, rejecting mutant populations that didn't quite make the grade, and "selecting for" others whose sonic characteristics interested me.

This reconceptualization of Elthar changed my role in the interaction from "recording studio collaborator" to "experimenter in population biology". Elthar became the world in which I conducted my "experiments". I began to write scripts (environments) that would select for certain characteristics -- one script might foster the development of a large low frequency content, another would tend to produce short, choppy sounds with lots of reverberation. I wondered what would happen when two populations were combined and placed in a particular environment as opposed to each one evolving independently. I was able to design whatever evolutionary pathways I desired.

All of these "experiments" left audible results (the soundfiles). There's no place like Home is simply a record of the empirical results of these tests. The evolutionary approach I adopted produced sounds that flowed very naturally into each other. Although I did make a number of compositional choices unrelated to the "experimenting" while assembling the soundfiles into the piece, both the basic idea for the structure and the actual sounds themselves were a direct result of the interaction that developed between Elthar and myself.

Michalski, R. S., Carbonell, J. G. and Mitchell, T. M. (editors). 1983. Machine Learning: An Artificial Intelligence Approach. Palo Alto, California: Tioga Publishing Company.

Minsky, M. 1975. "A Framework for Representing Knowledge." The Psychology of Computer Vision. Winston, P. H. (editor). New York: McGraw-Hill.

Schank, R. C. and Abelson, R. P. 1977. Scripts, Plans, Goals and Understanding. Hillsdale, New Jersey: Lawrence Erlbaum Associates.

Yavelow, C. 1986. "MIDI and the Apple Macintosh." Computer Music Journal 10(3):11-47.